Illustration by Fabrizio Lenci

After living in Japan for more than a decade, Rafael Bretas, originally from Brazil, speaks Japanese pretty well. But aspects of written Japanese, such as its strict hierarchies of politeness, still elude the postdoc. He used to write to senior colleagues and associates in English, which often led to misunderstandings.

Artificial-intelligence (AI) chatbots have changed all that. When the AI firm OpenAI, based in San Francisco, California, launched ChatGPT in November 2022, Bretas, who studies cognitive development in primates at RIKEN, a national research institute in Kobe, Japan, was quick to check whether it could make his written Japanese suitably formal. His hopes weren’t high. He’d heard that the chatbot wasn’t very good in languages other than English. Certainly, experiments in his own language, Portuguese, had resulted in text that “sounded very childish”.

But when he sent some chatbot-tweaked letters to Japanese friends for a politeness check, they said that the writing was good. So good, in fact, that Bretas now uses chatbots daily to write formal Japanese. It saves him time, and frustration, because he can now get his point across immediately. “It makes me feel more confident in what I’m doing,” he says.

Since ChatGPT’s launch, much has been written about its ability to disrupt professions, including fears of job losses and damaged economies. Researchers immediately began experimenting with the tool, which can assist in many of their daily tasks, from writing abstracts to generating and editing computer code. Some say that it’s a great time-saving device, whereas others warn that it might produce low-quality papers.

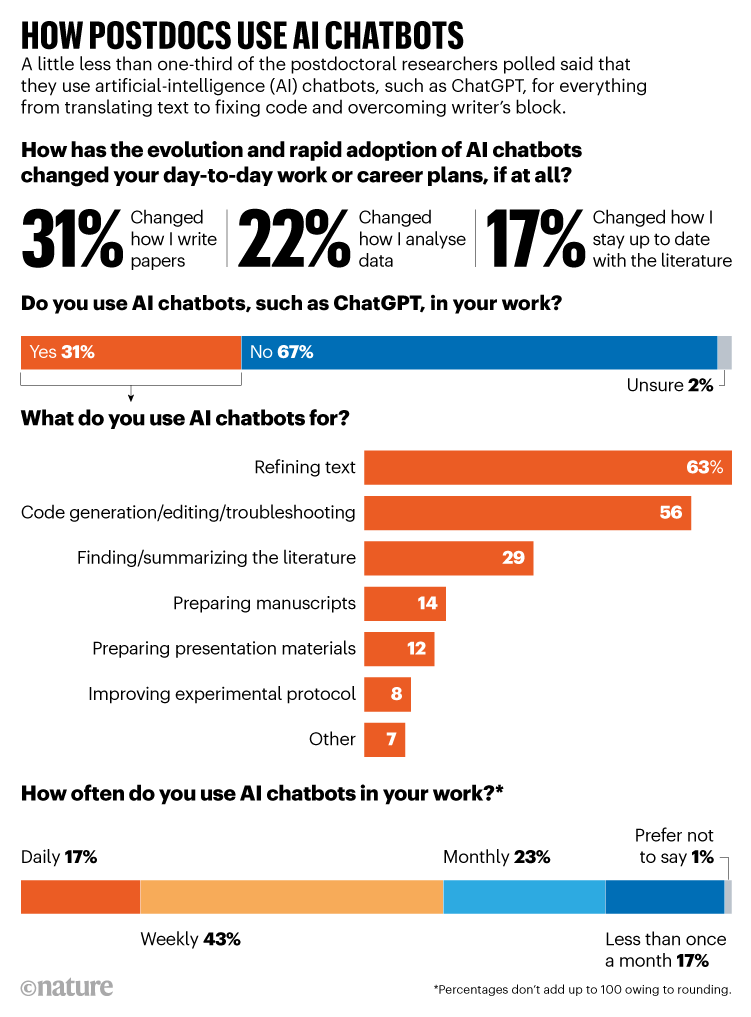

Last month, Nature polled researchers about their views on the rise of AI in science, and found both excitement and trepidation. Still, few studies have been published on how researchers are using AI. To get a better handle on that, Nature included questions about the use of AI in its second global survey of postdocs, in June and July. It found that 31% of employed respondents reported using chatbots. But 67% did not feel that AI had changed their day-to-day work or career plans. Of those who use chatbots, 43% do so on a weekly basis, and only 17% use them daily, like Bretas (see ‘How postdocs use AI chatbots’).

Those proportions are likely to change rapidly, says Mushtaq Bilal, a postdoc studying comparative literature at the University of Southern Denmark in Odense, who frequently comments on academic uses of AI chatbots. “I think this is still quite early for postdocs to feel if AI has changed their day-to-day work,” he says. In his experience, researchers and academics are often slow to adopt new technologies owing to institutional inertia.

Digital assistance

It’s difficult to say whether the level of chatbot use found in Nature’s postdoc survey is higher or lower than the average for other professions. A survey carried out in July by the think tank Pew Research Center, based in Washington DC, found that 24% of people in the United States who said that they had heard of ChatGPT had used it, but that proportion rose to just under one-third for those with a university education. Another survey of Swedish university students in April and May found that 35% of 5,894 respondents used ChatGPT regularly. In Japan, 32% of university students surveyed in May and June said that they used ChatGPT.

The most common use of chatbots reported in the Nature survey was to refine text (63%). The fields with the highest reported chatbot use were engineering (44%) and the social sciences (41%). Postdocs in the biomedical and clinical sciences were less likely to use AI chatbots for work (29%).

Xinzhi Teng, a radiography postdoc at the Hong Kong Polytechnic University, says that he uses chatbots daily to refine text, prepare manuscripts and write presentation materials in English, which is not his first language. He might, he says, ask ChatGPT to “polish” a paragraph and make it sound “native and professional”, or to generate title suggestions from his abstracts. He goes over the chatbot’s suggestions, checking them for sense and style, and selecting the ones that best convey the message he wants. He says that the tool saves him money that he would previously have spent on professional editing services.

Ashley Burke, a postdoc who studies malaria at the University of the Witwatersrand in Johannesburg, South Africa, says that she uses chatbots when she has writer’s block and needs help “just getting the first few words on the page”. In those moments, asking ChatGPT to “write an introduction to malaria incidence in Zambia” results in a few paragraphs that can unlock her own creativity. She also uses the tool to simplify scientific concepts, either for her own understanding, or to help to convey them to others in simple language, which, she says, is “the most useful side of AI that I’ve found so far”. For example, while working on a methods section, she was unsure how to phrase the description of her DNA sequence analysis. She asked ChatGPT “how would you check DNA sequences for polymorphisms?” and it spat out a ten-step plan, starting with data collection and ending with reporting, which helped her to resolve the “sticky points” in her text.

Bilal says that the higher proportion of chatbot use among engineers and social scientists resonates with his own observations. However, he has found biomedical scientists keen to use chatbots, too, at least in Denmark. The prevalence of engineering postdocs who use chatbots to refine text (82%) concerns him because, to him, it signals that engineers are not suitably trained in scientific writing. “AI chatbots can address this issue to an extent but engineering programmes will have to invest in teaching writing. It is a very important skill for a scientist,” he says.

Some 56% of the postdocs who report using chatbots in Nature’s survey employ them to generate, edit and troubleshoot code. For example, Iza Romanowska, an archaeology postdoc at Aarhus University in Denmark, uses computational models to study ancient societies. She is self-taught in programming, so her code can be idiosyncratic. ChatGPT helps with that, she says. “It puts in conventions that I don’t know about, stuff that doesn’t have an impact on how the code works, but that helps others read it.” This is good for transparency, too, she adds, as many ad-hoc coders can view the effort of cleaning up their code as a deterrent to publishing it open source.

Archaeologist Iza Romanowska uses ChatGPT to troubleshoot her self-taught coding. Credit: Iza Romanowska

Antonio Sclocchi, a physicist doing a postdoc on machine learning at the Swiss Federal Institute of Technology Lausanne, also uses ChatGPT to code — paying for GPT-4, an updated version of the free tool, which he says performs better at some coding tasks. He also uses it when creating exam questions and illustrations in LaTeX, a document-preparation system.

Self-taught, self-regulated

Nature’s survey results make sense to Emery Berger, a computer scientist at the University of Massachusetts, Amherst. Although the proportion of postdocs who say that they use chatbots for work is lower than he anticipated, he says that he has seen a “shocking amount of scepticism” in academia towards AI tools such as ChatGPT. The people who criticize chatbots have often never even tried to use them, he notes. And when they have, they often focus on the problems, rather than trying to understand the revolutionary capabilities of the technology. “It’s like, you wave a wand and the Statue of Liberty appears. And one of the eyebrows is missing. But you just made the Statue of Liberty appear!”

Berger notes that chatbots can be fantastically useful for early-career researchers whose first language is not English. He thinks that these editing assistants are probably already playing a part in improving cover and application letters from students, as well as abstracts of papers submitted to journals, adding: “You can tell the English is a lot better.”

Rafael Bretas has used ChatGPT to help refine his written Japanese e-mails to colleagues. Credit: Rafael Bretas

Berger reckons that most postdocs seek out and try the AI tools on their own. Bretas, Romanowska and Sclocchi were all introduced to chatbots informally, by friends or colleagues. Of the three, only Bretas says that his institution has issued formal guidelines on how staff should use AI chatbots. RIKEN’s policy prohibits employees from putting information into chatbots that isn’t public or that is personal, because there are no guarantees that data entered into ChatGPT, for instance, stay private. The guidelines, released in May, also advise users to make sure that their chatbot use does not infringe on institutional rules on copyright, to ensure that they gather information from many different sources and to check the accuracy of the chatbot results individually.

Romanowska says that her university has not issued any formal guidelines or advice on how to use chatbots. This seems common: in the survey of Swedish students, 55% said that they did not know whether their institutions had guidelines for the responsible use of AI. “The only thing my university has put forward is a specification that students are not allowed to use ChatGPT for any assessments,” such as assignments or exams, Romanowska says. She describes this reaction as “quite naive”. “This is a tool that we have to teach our students. We’re all going to use it for work, and trying to pretend it doesn’t exist isn’t going to change this.”

Tina Persson, a careers coach based in Copenhagen, says that many of her early-career-researcher clients are pessimistic about AI tools. “This is bad for their careers,” she says, because industry — where many of them will probably end up, owing to the dearth of permanent academic positions — is rushing towards this new technology.

Banishing drudgery

Academia might be slower to take up AI; around two-thirds of the postdocs in the Nature survey did not feel that AI had changed their day-to-day work and career plans. However, of those who said that they do use AI chatbots, two-thirds said it had influenced how they work.

The postdocs interviewed for this article agreed that chatbots are a great tool for taking the drudgery out of academic work. Romanowska says that, for the students she supervises, she recommends using ChatGPT to code, especially when they are struggling to get their code to work. “It is very easy to copy and paste problematic code into ChatGPT and then ask what is wrong. Not only will it most often point out the problem, but it will also highlight other potential problems,” she says.

Mushtaq Bilal says that AI chatbots should not replace good training programmes for scientific writing. Credit: Kristoffer Juel Poulsen

Most of the interviewees also readily acknowledged the limitations of the tool. Bilal is concerned that 29% of surveyed postdocs said that they use it to find literature. These chatbots fabricate citations to papers that don’t exist, he says. “If one is not trained in using them, one may end up wasting a lot of time.”

Sclocchi says that things can certainly go wrong if users become lazy and rely on chatbots too much. When writing an article, the tools can suggest a structure or help rephrase paragraphs, he says. “But it is still up to you to decide which story to tell, how to tell it to your audience and how to synthesize the information you have.” Although using AI tools for coding speeds up his work, thinking about how he wants to structure his code and how his results relate to the rest of his field is something that AI simply cannot do. “That requires some depth,” he says.

Romanowska feels that there is a clear distinction between the parts of her work that chatbots can help with, and the parts that they can’t. The grind of administrative work — answering reviewers’ comments, writing cover letters for manuscripts, applying for jobs, writing abstracts — these are technical skills that chatbots can help with, she says. But the scholarly work, which takes time, deep thought and ingenuity, chatbots can’t do. That, she says, is “the actual core of what we are supposed to be doing”.